xunit, testcontainers, and .NET

Setting up realistic test environments with Testcontainers for reliable testing scenarios

Unit tests leave much to be desired when testing a server that is tied to a database. I wanted an easy way to test the whole server, both to improve maintainability and to ship faster. After evaluating the available options, I chose to write the tests with xunit.v3 and to spin up dependencies such as Postgres and Redis with Testcontainers.

Test runner; run the tests

    using Microsoft.AspNetCore.Hosting;
    using Microsoft.AspNetCore.Mvc.Testing;
    using Testcontainers.PostgreSql;
    using Xunit;

    // Registers one WebApiFixture instance for the whole test assembly.
    [assembly: AssemblyFixture(typeof(WebApiFixture))]

    public class WebApiFixture : WebApplicationFactory<Program>, IAsyncLifetime
    {
        /// <summary>
        /// Testcontainer for the postgres database.
        /// </summary>
        private readonly PostgreSqlContainer postgreSqlContainer = new PostgreSqlBuilder()
            .WithImage("postgres:14.12")
            .WithPortBinding(5432)
            .Build();

        /// <summary>
        /// State to be kept through the execution of all tests
        /// </summary>
        public State State { get; set; } = new State();

        protected override void ConfigureWebHost(IWebHostBuilder builder) { }

        public async ValueTask InitializeAsync()
        {
            await postgreSqlContainer.StartAsync();

            // The server reads its connection strings from the environment,
            // so point both the writer and the reader at the container.
            Environment.SetEnvironmentVariable("DATABASE_URL", postgreSqlContainer.GetConnectionString());
            Environment.SetEnvironmentVariable("DATABASE_READERURL", postgreSqlContainer.GetConnectionString());
        }

        // xunit.v3's IAsyncLifetime expects ValueTask DisposeAsync(), so override the
        // WebApplicationFactory method rather than hiding it with a Task-returning one.
        public override async ValueTask DisposeAsync()
        {
            await postgreSqlContainer.DisposeAsync();
            await base.DisposeAsync();
        }
    }

    public class State
    {
        public string? AccessToken { get; set; }
        public string? RefreshToken { get; set; }
        public string? TenantId { get; set; }
        public string Email { get; } = "[email protected]";
        public string Password { get; } = "test_password";
    }

The AssemblyFixture in xunit.v3 has been a life-saver for having singletons across the test runner. The fixture above is the standard WebApiFixture setup, modified to our requirements.
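As an illustration of how a test might consume the fixture, here is a minimal sketch. It assumes that xunit.v3 passes assembly fixtures to test classes through the constructor; the `SmokeTests` class and the `/health` route are hypothetical and not taken from the project.

```csharp
using System.Net.Http;
using System.Threading.Tasks;
using Xunit;

// Hypothetical test class. The assembly-wide WebApiFixture instance is passed
// to the constructor, so every test class shares the same Postgres container.
public class SmokeTests
{
    private readonly WebApiFixture _fixture;

    public SmokeTests(WebApiFixture fixture) => _fixture = fixture;

    [Fact]
    public async Task Health_endpoint_responds()
    {
        // CreateClient() is inherited from WebApplicationFactory and starts the server in-memory.
        using HttpClient client = _fixture.CreateClient();

        // "/health" is an assumed route; substitute one the server actually exposes.
        using HttpResponseMessage response =
            await client.GetAsync("/health", TestContext.Current.CancellationToken);

        Assert.True(response.IsSuccessStatusCode);
    }
}
```

Values that later tests depend on, such as an access token, can be stored on `_fixture.State` so they survive across test classes.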

using Xunit.Internal;

[assembly: CollectionBehavior(DisableTestParallelization = true)]

[assembly: TestCollectionOrderer(typeof(CollectionDisplayNameOrderer))]

[assembly: TestCaseOrderer(typeof(TestAlphabeticalOrderer))]

public class CollectionDisplayNameOrderer : ITestCollectionOrderer

{

public IReadOnlyCollection<TTestCollection> OrderTestCollections<TTestCollection>(IReadOnlyCollection<TTestCollection> testCollections) where TTestCollection : ITestCollection => testCollections.OrderBy(collection => collection.TestCollectionDisplayName).CastOrToReadOnlyCollection();

}

public class TestAlphabeticalOrderer : ITestCaseOrderer

{

public IReadOnlyCollection<TTestCase> OrderTestCases<TTestCase>(IReadOnlyCollection<TTestCase> testCases) where TTestCase : notnull, ITestCase => testCases.OrderBy(testCase => testCase.TestMethod?.MethodName).CastOrToReadOnlyCollection();

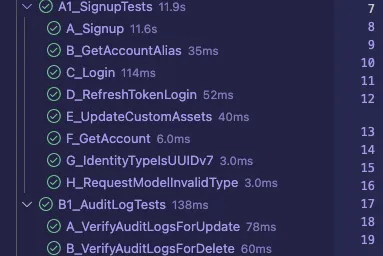

}In this project, both Collections and TestCases are run in alphabetical order, sequentially. Parallel execution of tests is turned off since that model of running tests is not compatible with having a single database instance.
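To make the effect of alphabetical ordering concrete, here is a small standalone sketch that sorts method names the same way the test case orderer does. The names are the ones used by the CRUD base class later in this post; the `TestOrder` helper is hypothetical, and it uses an ordinal comparer for determinism, whereas the orderer above relies on the default string comparison.

```csharp
using System;
using System.Linq;

// Method names in a deliberately shuffled "declaration" order.
string[] declared = { "A400_Get", "A100_Create", "A500_Delete", "A300_ListAll", "A200_Update" };

// Same idea as TestAlphabeticalOrderer: order test cases by method name.
string[] executed = TestOrder.Alphabetical(declared);

Console.WriteLine(string.Join(" -> ", executed));
// prints: A100_Create -> A200_Update -> A300_ListAll -> A400_Get -> A500_Delete

// Hypothetical helper, only for this illustration.
static class TestOrder
{
    public static string[] Alphabetical(string[] names) =>
        names.OrderBy(name => name, StringComparer.Ordinal).ToArray();
}
```

The numeric prefixes are what turn alphabetical order into a dependency order: create runs before update, and delete runs last.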

Abstracting away basic CRUD tests

    using System.ComponentModel.DataAnnotations;
    using System.Reflection;
    using Bogus;

    /// <summary>
    /// Factory for generic Faker instance which takes attributes into account.
    /// </summary>
    public static Faker<T> New<T>() where T : class
    {
        var faker = new Faker<T>("en").StrictMode(true);
        Type type = typeof(T);

        // Use reflection to check properties in model and populate based on rules.
        foreach (PropertyInfo property in type.GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            if (!property.CanWrite || !property.CanRead)
            {
                continue;
            }

            Type propertyType = property.PropertyType;

            // NOTE: Rules should go from specific to generic

            // Specific rules
            EmailAddressAttribute? emailAddressAttribute = property.GetCustomAttribute<EmailAddressAttribute>();
            if (emailAddressAttribute != null)
            {
                faker.RuleFor(property.Name, f => f.Internet.Email());
                continue;
            }

            // Generic rules

            // Handle boolean values
            if (propertyType == typeof(bool) || propertyType == typeof(bool?))
            {
                faker.RuleFor(property.Name, f => f.Random.Bool());
                continue;
            }
        }

        return faker;
    }

With the generic reflection-based Faker, CRUD requests can be generated without defining any of the parameters by hand. It also serves as a good test of how well the validation [Attributes] handle varied inputs, because the Faker produces different data on every run, at the cost of adding some flakiness to the tests.
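The rule selection in the factory above can be tried without Bogus. The following sketch only reports which rule each property would receive, checking the specific attribute first and the generic type second. The `SignupRequest` model and the `RuleSelector` helper are hypothetical names introduced for this illustration.

```csharp
using System;
using System.ComponentModel.DataAnnotations;
using System.Reflection;

foreach (PropertyInfo property in
         typeof(SignupRequest).GetProperties(BindingFlags.Public | BindingFlags.Instance))
{
    Console.WriteLine($"{property.Name}: {RuleSelector.Describe(property)}");
}

// Hypothetical request model, used only for this illustration.
public class SignupRequest
{
    [EmailAddress]
    public string Email { get; set; } = "";

    public bool AcceptsMarketing { get; set; }

    public bool? IsTrial { get; set; }

    public string Company { get; set; } = "";
}

public static class RuleSelector
{
    // Mirrors the factory's order: attribute-based (specific) rules first,
    // type-based (generic) rules second.
    public static string Describe(PropertyInfo property)
    {
        if (property.GetCustomAttribute<EmailAddressAttribute>() != null)
        {
            return "email";
        }

        Type propertyType = property.PropertyType;
        if (propertyType == typeof(bool) || propertyType == typeof(bool?))
        {
            return "bool";
        }

        return "no rule";
    }
}
```

Here `Email` resolves to the email rule even though it is a plain string, both boolean properties resolve to the bool rule, and `Company` falls through with no rule. Since the factory enables `StrictMode(true)`, my understanding is that Bogus would reject a model with such an uncovered property, so this is the place where further generic rules (strings, numbers, and so on) would be added.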

    public abstract class CrudIntegrationTest<TCreateRequestModel, TUpdateRequestModel, TResponse>
        where TResponse : class, new()
        where TCreateRequestModel : class, new()
        where TUpdateRequestModel : class, new()
    {
        public string _endpoint { get; set; }
        public static string? _id { get; set; }
        public int _expectedCount { get; set; } = 1;

        private readonly ITestOutputHelper _output;
        private Faker<TCreateRequestModel> CreateModelFaker { get; set; }
        private Faker<TUpdateRequestModel> UpdateModelFaker { get; set; }

        /// Create overrides to the generic Faker created, if required
        protected abstract void ConfigureCreateModelFaker(Faker<TCreateRequestModel> faker);
        protected abstract void ConfigureUpdateModelFaker(Faker<TUpdateRequestModel> faker);

        [Fact]
        public async Task A100_Create()
        {
            // POST ${endpoint}
            // Logic for checking create requests made by Faker
            // Sets the string id property on the class
        }

        [Fact]
        public async Task A200_Update()
        {
            // PATCH ${endpoint}/${id}
            // Mock update using faker
            // Updates the resource with 'id' created in A100_Create
        }

        [Fact]
        public async Task A300_ListAll()
        {
            // GET ${endpoint}
            // Since the database is new, validate against int _expectedCount
        }

        [Fact]
        public async Task A400_Get()
        {
            // GET ${endpoint}/${id}
        }

        [Fact]
        public async Task A500_Delete()
        {
            // DELETE ${endpoint}/${id}
        }
    }

Because of how the CrudIntegrationTest abstract class is written, every test class that inherits from it runs the same series of CRUD actions against the endpoint provided in the constructor. This makes authoring a basic set of tests very easy for endpoints that behave similarly, which was most of the code base in this case.

Furthermore, having a running instance of the production server, database, and Faker helped me test locale support, new database versions, and SQL query stability across ORM upgrades.

Next steps

- While this worked well for a monolithic system, I'm not sure how well this approach works for a microservice architecture.

- Intuitively, I feel like there has to be a point where the testcontainers set-up becomes impractical/limited compared to a conventional Docker Compose based runner.